Highlights:

- Artificial intelligence can exhibit bias for several reasons, stemming from the data used to train AI models and from the design and implementation of the algorithms themselves.

Artificial Intelligence (AI) has surged in popularity, revolutionizing various sectors. However, its widespread adoption has also brought forth significant ethical concerns. As AI increasingly infiltrates sensitive domains such as hiring, criminal justice, and healthcare, questions of bias and fairness have ignited a fervent debate. Consequently, the scrutiny of AI and its outcomes has intensified.

At the heart of this discourse lies the pressing concern of artificial intelligence bias. Organizations must be acutely aware of the potential biases that can permeate AI systems to ensure they are not perpetuating discrimination or unfairness. Understanding the intricacies of AI bias becomes paramount as we strive to develop solutions that foster the creation of unbiased AI systems.

In this blog post, we will unravel the concept of bias in artificial intelligence, exploring its causes and implications. We will also look at why vigilance is imperative, highlighting the measures necessary to identify, prevent, and rectify biases, ultimately nurturing a more inclusive and ethically sound AI landscape.

What Is Artificial Intelligence Bias?

Machine learning and artificial intelligence bias, also known as algorithmic bias, refers to the tendency of algorithms to reflect human biases.

So what is bias in artificial intelligence? Artificial intelligence bias refers to the phenomenon where artificial intelligence systems produce results or make decisions that systematically and unfairly disadvantage specific individuals or groups based on characteristics such as race, gender, age, or socioeconomic status.

This bias can occur due to various factors, including biased or unrepresentative training data and algorithmic design flaws. It can result in discriminatory outcomes, reinforce societal biases, and perpetuate unfair treatment. Identifying and addressing AI bias is crucial to ensure fairness, transparency, and ethical use of AI technologies.

Examples of bias in artificial intelligence include algorithms that disproportionately reject loan applications from marginalized communities or facial recognition systems that exhibit higher error rates for particular racial or ethnic groups. By implementing comprehensive data collection, diverse training sets, rigorous testing, and ongoing monitoring, organizations can mitigate bias and foster a more inclusive and ethical AI landscape.
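One way to make the loan example concrete is to compare approval rates across groups. The snippet below is a minimal sketch, not a complete fairness audit: the function names `approval_rates` and `approval_rate_ratio`, the group labels, and the toy data are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group.

    `decisions` is an iterable of (group, approved) pairs,
    where `approved` is True or False.
    """
    totals = defaultdict(int)
    approved_counts = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approved_counts[group] += 1
    return {g: approved_counts[g] / totals[g] for g in totals}


def approval_rate_ratio(rates):
    """Ratio of the lowest to the highest approval rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so all rates are equal
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, loan approved?)
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(sample)
ratio = approval_rate_ratio(rates)
print(rates)
print(f"lowest-to-highest approval ratio: {ratio:.2f}")
```

A ratio well below 1.0 does not prove discrimination on its own, but it flags a disparity that deserves a closer look at the data and the model.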

Understanding the intricacies of AI bias is crucial in unraveling its impact and implications. By delving into the question of “What is artificial intelligence bias?” and comprehending its underlying mechanisms, we can shed light on why artificial intelligence tends to exhibit biases in the first place.

Why Is Artificial Intelligence Biased?

Artificial intelligence bias arises from the human element involved in selecting the data used by algorithms and determining the application of their results. When extensive testing and diverse teams are lacking, unconscious biases can easily infiltrate machine learning models.

Consequently, AI systems automate and perpetuate these biased models. For instance, bias in facial recognition algorithms can result in higher error rates for specific racial or ethnic groups, leading to discriminatory consequences. Similarly, biased algorithms used in criminal justice systems may disproportionately impact marginalized communities, perpetuating existing biases and inequalities. Combating AI bias therefore requires diverse and inclusive teams, rigorous testing, and transparency and accountability measures throughout the development and deployment of AI systems.
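The kind of testing described here can start with something as simple as breaking a model's error rate down by group. The following sketch assumes you already have predictions and ground-truth labels tagged with a group; the names `error_rate_by_group` and `largest_gap`, the group labels, and the records are hypothetical.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong predictions per group.

    `records` is an iterable of (group, predicted, actual) triples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def largest_gap(rates_by_group):
    """Difference between the worst and the best per-group error rate."""
    return max(rates_by_group.values()) - min(rates_by_group.values())


# Hypothetical evaluation records: (group, predicted label, actual label)
records = [
    ("group_1", "match", "match"),
    ("group_1", "no_match", "no_match"),
    ("group_1", "match", "match"),
    ("group_1", "match", "no_match"),
    ("group_2", "match", "no_match"),
    ("group_2", "no_match", "match"),
    ("group_2", "match", "match"),
    ("group_2", "no_match", "no_match"),
]

error_rates = error_rate_by_group(records)
gap = largest_gap(error_rates)
print(error_rates)
print(f"largest error-rate gap: {gap:.2f}")
```

An overall accuracy figure can hide exactly this kind of gap, which is why the breakdown is reported per group rather than as a single number.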

Bias can enter an AI system through the data used to train its models, through the design of those models, and through the implementation of the algorithms.

Here are some key factors contributing to bias in artificial intelligence:

- Biased training data

- Data collection process

- Algorithmic design

- Lack of diversity in development teams

- Limited or incorrect feedback

By actively mitigating the above factors, organizations can work towards reducing bias and ensuring that AI systems operate fairly.

Understanding the causes of AI bias provides a foundation for implementing practices to reduce and mitigate bias in artificial intelligence, fostering fairness and ethical decision-making.

Practices for Reducing Bias in Artificial Intelligence

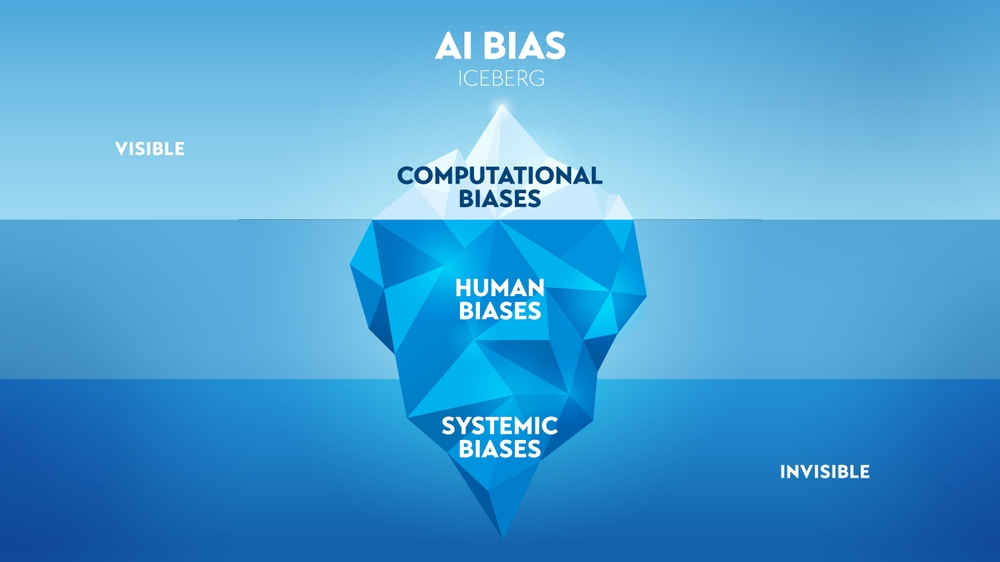

AI can be influenced by three types of bias: systemic, human, and computational. By analyzing and understanding these distinct forms of bias, we can effectively tackle each and establish a solid ethical foundation. This approach allows us to address bias comprehensively and construct a resilient framework that ensures the ethical use of AI.

Here is a detailed explanation of how to make artificial intelligence less biased.

Overcoming Systemic Bias through Value-Centric Approaches

Ensuring fairness, equity, and ethical use of AI technologies necessitates addressing systemic bias. Systemic bias encompasses biases deeply entrenched within the very fabric of AI development, deployment, and decision-making processes. Several crucial factors must be carefully considered and acted upon to tackle this pervasive issue.

Tackling systemic bias entails actions such as inclusive and varied data collection, rigorous assessment, ethical frameworks, methods to mitigate bias, inclusive teams, and ongoing monitoring. By actively addressing systemic bias, we can establish AI systems that are fair and responsible, ensuring that the development, deployment, and decision-making processes of AI align with societal values and human rights principles. Promoting fairness, transparency, and accountability in AI systems is crucial, and it is achieved by identifying, assessing, and mitigating biases.

Developing diverse and inclusive teams aids in identifying and addressing biases that may have been overlooked, while continuous monitoring and assessment help rectify biases in real-world applications. By implementing these measures, organizations can cultivate equitable and inclusive AI systems that minimize potential harms associated with biased AI technologies.

Combatting Human Bias through Transparent Practices

Human bias arises when individuals rely on their own assumptions and interpretations to fill gaps in the information available to them.

Addressing human bias is critical to mitigating bias in artificial intelligence systems. Human bias can unintentionally seep into AI models through biased data selection, biased interpretation of data, or biased decision-making during algorithm design.

Strive for increased transparency in your algorithms. By gaining a deeper understanding of the reasons behind the outcomes produced by your models, you will be better equipped to identify and eliminate biases.

Businesses need to make efforts towards transparency by clearly explaining the essential inputs utilized by their AI models and the rationale behind them. Additionally, companies can enhance transparency by subjecting their systems to external audits conducted by impartial third parties. The involvement of additional perspectives can effectively intercept biases before they reach the end users.

Organizations must promote awareness and education about bias among AI developers and stakeholders to ensure they understand the potential impact and consequences of bias in AI systems. Diverse and inclusive teams should be involved in developing and training AI models to bring different perspectives and mitigate the influence of individual preferences.

Mitigating Computational Bias with Pristine Data

When it comes to bias in artificial intelligence, the most obvious and widely discussed issue revolves around the presence of bias in the training data, known as computational bias. It is crucial to carefully tailor the AI system and training data to tackle this challenge. Instead of creating a general-purpose model, focus on a specific task for each AI bot to limit the potential for significant biases in the dataset.

When compiling the training data, it is essential to consciously remove biases as much as possible. This involves engaging with the different parties involved and seeking input from end users to uncover any biases they may have experienced. Additionally, be vigilant about bias by proxy, which occurs when unintended bias is introduced through secondary characteristics connected to primary ones, for example a postal code that is strongly associated with race.
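A rough way to screen for bias by proxy is to ask how much better you can guess a protected attribute once you know a candidate feature. The sketch below is one simple heuristic under stated assumptions, not an established standard: the function name `proxy_strength`, the feature values, and the group labels are hypothetical, and the "informed" accuracy is measured on the same rows it is derived from, so it will look optimistic for features with many distinct values.

```python
from collections import Counter, defaultdict


def proxy_strength(rows):
    """Estimate how well a feature predicts a protected attribute.

    `rows` is an iterable of (feature_value, protected_value) pairs.
    Returns (baseline, informed):
      baseline: accuracy of always guessing the most common protected value
      informed: accuracy of guessing the most common protected value
                within each feature value
    """
    rows = list(rows)
    if not rows:
        raise ValueError("rows must not be empty")

    overall = Counter(protected for _, protected in rows)
    baseline = overall.most_common(1)[0][1] / len(rows)

    per_feature = defaultdict(Counter)
    for feature, protected in rows:
        per_feature[feature][protected] += 1
    correct = sum(c.most_common(1)[0][1] for c in per_feature.values())
    informed = correct / len(rows)
    return baseline, informed


# Hypothetical toy data: (area of residence, protected group)
rows = [
    ("north", "A"), ("north", "A"), ("north", "A"), ("north", "B"),
    ("south", "B"), ("south", "B"), ("south", "B"), ("south", "A"),
]

baseline, informed = proxy_strength(rows)
print(f"baseline accuracy: {baseline:.2f}")
print(f"accuracy when the feature is known: {informed:.2f}")
```

If knowing the feature raises the accuracy well above the baseline, the feature carries information about the protected attribute, and removing the protected attribute alone will not remove the bias.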

Ways to Prevent AI Bias

Bias in artificial intelligence is reduced and prevented by comparing and validating different training data samples for representativeness.
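Checking a sample for representativeness can be sketched as a comparison between each group's share in the training data and its share in a reference population. This is a minimal illustration only: the helper names `group_shares` and `share_gaps`, the group labels, and the reference shares are assumptions made for the example.

```python
from collections import Counter


def group_shares(labels):
    """Return each group's share of the given labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("labels must not be empty")
    return {g: n / total for g, n in counts.items()}


def share_gaps(sample_labels, reference_shares):
    """Sample share minus reference share for every group.

    Positive values mean the group is over-represented in the sample,
    negative values mean it is under-represented.
    """
    sample = group_shares(sample_labels)
    groups = set(sample) | set(reference_shares)
    return {
        g: sample.get(g, 0.0) - reference_shares.get(g, 0.0)
        for g in groups
    }


# Hypothetical training sample and reference population shares
training_labels = ["A"] * 8 + ["B"] * 2
reference = {"A": 0.5, "B": 0.5}

gaps = share_gaps(training_labels, reference)
for group in sorted(gaps):
    print(f"{group}: {gaps[group]:+.2f}")
```

The same function can compare two training samples with each other by passing `group_shares(other_sample)` as the reference.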

Below are eight ways to keep this bias from creeping into your models:

- Define and narrow the business problem you are solving

- Structure data collection to accommodate varying points of view

- Understand your training data

- Form a diverse ML team to ask diverse questions

- Think about all your end-users

- Annotate with diversity

- Test and deploy with feedback in mind

- Make a concrete plan to improve your model based on the input

Conclusion

In conclusion, addressing AI bias is crucial to keeping AI systems from perpetuating discrimination and unfairness. Diverse data, robust evaluation, ethical frameworks, bias mitigation, inclusive teams, and continuous monitoring are key. Promoting fairness and transparency ensures accountable AI systems that align with societal values.